MWoffliner is a tool for making a local offline HTML snapshot of any online MediaWiki instance. It goes through all online articles (or a selection, if specified) and creates the corresponding ZIM file. It has mainly been tested against Wikimedia projects like Wikipedia and Wiktionary, but it should also work with any recent MediaWiki.

Read CONTRIBUTING.md to learn more about MWoffliner development.

User help is available in the form of a FAQ.

- Scrape with or without image thumbnails

- Scrape with or without audio/video multimedia content

- S3 cache (optional)

- Image size optimiser / WebP converter

- Scrape all articles in the selected namespaces, or only those given in a title list

- Specify additional/non-main namespaces to scrape

Run mwoffliner --help to get all the possible options.

- *NIX Operating System (GNU/Linux, macOS, ...)

- Redis

- Node.js version 24 (we support only a single Node.js version; other versions might work or not)

- Libzim (On GNU/Linux & macOS we automatically download it)

- Various build tools which are probably already installed on your machine (packages libjpeg-dev, libglu1, autoconf, automake, gcc on Debian/Ubuntu)

... and an online MediaWiki with its API available.
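As a rough illustration of the Node.js requirement above, the following sketch checks the major version of the running interpreter before starting a scrape. The function names (`nodeMajor`, `isSupportedNode`) are hypothetical helpers written for this example and are not part of MWoffliner.

```javascript
// Major Node.js version named in the requirements list above.
const REQUIRED_NODE_MAJOR = 24;

// Extract the major version from strings such as "24.1.0" or "v24.1.0".
// (Hypothetical helper, not an MWoffliner function.)
function nodeMajor(version) {
  const match = /^v?(\d+)\./.exec(version);
  if (!match) {
    throw new Error(`Unrecognised Node.js version: ${version}`);
  }
  return Number(match[1]);
}

// True when the given version (default: the running one) has the required major.
function isSupportedNode(version = process.versions.node) {
  return nodeMajor(version) === REQUIRED_NODE_MAJOR;
}

if (!isSupportedNode()) {
  // Only a warning: other versions might work or not.
  console.warn(
    `MWoffliner targets Node.js ${REQUIRED_NODE_MAJOR}; found ${process.versions.node}.`
  );
}
```

The check only warns rather than exits, since other Node.js versions are unsupported but not necessarily broken.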

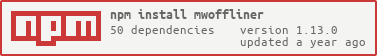

To install the latest released MWoffliner version from the npm registry (use -g to install globally, not only in the current folder):

    npm i -g mwoffliner

Warning: you might need to run this command with sudo, depending on how your npm / OS is configured. npm permission checking can be a bit annoying for a newcomer. Please read the documentation carefully if you hit problems: https://docs.npmjs.com/cli/v7/using-npm/scripts#user

Then you can run the scraper:

    mwoffliner --help

To use MWoffliner with an S3 cache, you should provide an S3 URL like this:

    --optimisationCacheUrl="https://wasabisys.com/?bucketName=my-bucket&keyId=my-key-id&secretAccessKey=my-sac"

If you've retrieved the mwoffliner source code (e.g. with a git clone of our repo), you can then install and run it locally (including with your local modifications):

    npm i
    npm run mwoffliner -- --help

Detailed contribution documentation and guidelines are available.
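Coming back to the S3 cache option shown earlier: the value passed to --optimisationCacheUrl packs the bucket name and credentials into query parameters. A minimal sketch of assembling such a URL, assuming the three parameter names from the example above, could look like this. `buildS3CacheUrl` is a hypothetical helper for illustration, not something MWoffliner exports.

```javascript
// Build an S3 cache URL in the shape used by the --optimisationCacheUrl example.
// (Hypothetical helper; parameter names are taken from the example URL above.)
function buildS3CacheUrl(endpoint, { bucketName, keyId, secretAccessKey }) {
  const url = new URL(endpoint);
  // searchParams.set percent-encodes values, so secrets containing
  // characters such as "+" or "/" do not corrupt the query string.
  url.searchParams.set("bucketName", bucketName);
  url.searchParams.set("keyId", keyId);
  url.searchParams.set("secretAccessKey", secretAccessKey);
  return url.toString();
}

const cacheUrl = buildS3CacheUrl("https://wasabisys.com", {
  bucketName: "my-bucket",
  keyId: "my-key-id",
  secretAccessKey: "my-sac",
});
console.log(`--optimisationCacheUrl="${cacheUrl}"`);
```

Building the URL programmatically rather than by string concatenation mainly helps when the secret key contains characters that have a special meaning in a query string.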

MWoffliner also provides an API and can therefore be used as a Node.js library. Here is a stub example that could go in your index.mjs file:

    import * as mwoffliner from 'mwoffliner';

    const parameters = {
      mwUrl: "https://es.wikipedia.org",
      adminEmail: "[email protected]",
      verbose: true,
      format: "nopic",
      articleList: "./articleList"
    };

    mwoffliner.execute(parameters); // returns a Promise

Complementary information about MWoffliner:

- MediaWiki software is used by thousands of wikis, the most famous ones being the Wikimedia ones, including Wikipedia.

- MediaWiki is a PHP wiki runtime engine.

- Wikitext is the name of the markup language that MediaWiki uses.

- MediaWiki includes a parser that converts wikitext into HTML, and this parser creates the HTML pages displayed in your browser.

- Have a look at the scraper functional architecture
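To make the wikitext-to-HTML point above concrete, here is a small sketch that builds a MediaWiki Action API request asking the wiki's parser for the rendered HTML of one page (`action=parse`). The `/w/api.php` path is an assumption that holds for Wikimedia wikis but may differ elsewhere, `buildParseUrl` is a hypothetical helper, and this is not necessarily how MWoffliner itself retrieves article HTML.

```javascript
// Build an Action API URL that asks MediaWiki to parse a page into HTML.
// (Hypothetical helper for illustration only.)
function buildParseUrl(apiUrl, title) {
  const url = new URL(apiUrl);
  url.searchParams.set("action", "parse"); // run the wikitext parser
  url.searchParams.set("page", title);     // page title to render
  url.searchParams.set("prop", "text");    // return only the rendered HTML
  url.searchParams.set("format", "json");
  return url.toString();
}

// Assumed API location for the wiki used in the library example above.
const parseUrl = buildParseUrl("https://es.wikipedia.org/w/api.php", "Madrid");
console.log(parseUrl);
```

Fetching that URL returns a JSON document whose `parse.text` field carries the HTML produced by the parser.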

GPLv3 or later, see LICENSE for more details.

This project received funding through NGI Zero Core, a fund established by NLnet with financial support from the European Commission's Next Generation Internet program. Learn more at the NLnet project page.